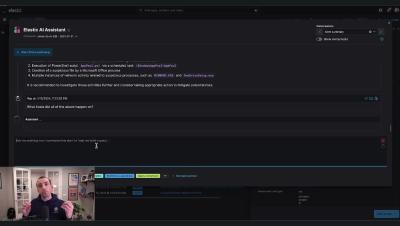

Deploying the Droids: Optimizing Charlotte AI's Performance with a Multi-AI Architecture

Over the last year, a prevailing sentiment has emerged: AI will not necessarily replace humans, but humans who use AI will replace those who don’t.