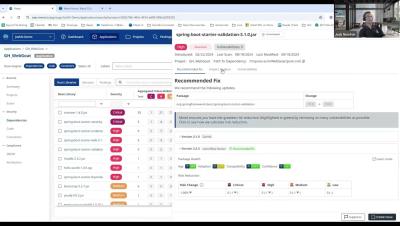

Mend Renovate Enterprise Cloud: Dependency Updates at Scale

If there’s one thing development and security teams can agree on, it’s that updating dependencies is a worthwhile endeavor. Keeping open-source dependencies up to date reduces bugs, both now and in the long run. And whether those bugs are security vulnerabilities or functional issues, everyone is happy to see them go.