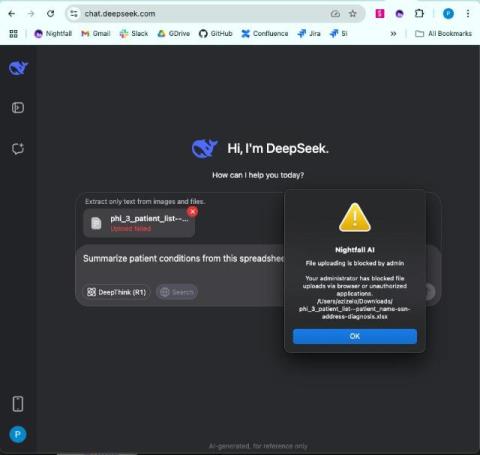

How to Prevent Sensitive Data Exposure to AI Chatbots Like DeepSeek

With the rise of AI chatbots such as DeepSeek, organizations face a growing challenge: how do you balance innovative technology with robust data protection? While AI promises to boost productivity and streamline workflows, it also introduces new risks. Sensitive data, whether customer payment information or proprietary research, may inadvertently end up in the prompts sent to AI models or in the outputs they generate.